Looking to personalize your ChatGPT experience? Customizing ChatGPT to work on your data is the way to go!

ChatGPT has transformed the way people interact with AI-powered bots. Imagine teaching a chatbot to speak the language of your industry, understand your customers’ needs, and embody your company’s distinct voice. Don’t worry, it’s simpler than it seems!

With the right ingredients, such as high-quality data, a bit of technical skill, and a measure of patience, you can craft a bespoke ChatGPT that mirrors your business. So, let’s roll up our sleeves and walk through how to train ChatGPT on your own data.

Understanding the Basics: ChatGPT and ChatGPT Training Data

Before you train ChatGPT on your own data, it helps to understand what the model is and what data it was originally trained on.

What is ChatGPT?

ChatGPT, an advanced conversational AI agent from OpenAI, employs deep learning methods to comprehend and produce text responses akin to human speech. With training data drawn from a wide array of sources including books, articles, and websites, ChatGPT boasts a broad knowledge base encompassing diverse subjects. Users can engage with ChatGPT for different inquiries, discussions, or creative assistance.

Key limitations of the base ChatGPT model

Although ChatGPT is a powerful conversational AI, its core version still faces several important constraints that become noticeable in real-world use:

- Lack of domain-specific knowledge. The base model often struggles with specialized topics outside its training data, sometimes offering broad or inaccurate explanations.
- No real-time updates. Since it can’t access current information, the model may miss recent events or changes unless integrated with external tools or advanced chatbot development frameworks.
- Inconsistent long-form communication. Over extended conversations, ChatGPT can lose track of context or even contradict earlier responses.
- Difficulty with nuanced prompts. Subtle or multi-layered instructions can confuse the model, leading to incomplete or off-target answers.
- No memory of previous interactions. Without dedicated memory features, each conversation starts anew, making it hard to maintain continuity or personalization.

These limitations explain why many teams are exploring how to train ChatGPT for more specialized applications. Businesses often choose to train a custom GPT model on their own data to achieve more relevant and accurate results.

Understanding how to train ChatGPT on your own data helps transform a general AI model into one that genuinely mirrors a company’s domain expertise and communication style.

How can training ChatGPT with your data be a game-changer?

What sets ChatGPT apart is its ability to be further trained on custom data, allowing users to convey domain-specific knowledge and context. This means you can tailor ChatGPT’s understanding to match your industry or interests.

For instance, in healthcare, ChatGPT can be trained on medical literature to provide more accurate information, while in finance, you can train ChatGPT on financial reports and market trends.

The benefits of this custom training are substantial. Firstly, it enables ChatGPT to acquire deep expertise in your chosen domain, enhancing the accuracy and depth of its responses. Additionally, it improves ChatGPT’s ability to comprehend the nuances and context of conversations, leading to more relevant and personalized interactions. Moreover, custom training provides users with greater control over ChatGPT’s responses, allowing for fine-tuning to meet specific requirements and preferences.

Why train a chatbot on your data?

Quick answer: To achieve a more accurate and relevant interaction, improving the overall user experience.

By using your data, you can make sure that the chatbot understands and addresses specific questions, concerns, and topics relevant to your customers. This customization enhances the chatbot’s accuracy and relevance, leading to more satisfying interactions. Additionally, training on your data allows you to maintain control over the chatbot’s knowledge base, ensuring that it reflects your company’s expertise and values.

How to Train ChatGPT on Your Data: A Step-by-Step Guide

Training ChatGPT on your specific data set unlocks the potential for personalized AI interactions. Let’s take a look at the steps you need to take to tailor ChatGPT's responses and capabilities to your unique requirements.

Turn to Python to train ChatGPT with custom data

Note: Training ChatGPT on custom data requires some coding knowledge and experience in Python. You will need to write Python code to interact with the OpenAI API, process your custom data, and manage the training process. Thus, being familiar with concepts like data preprocessing, API requests, and basic Python programming is essential for this task.

To train your own ChatGPT using OpenAI’s API, you’ll need to ensure you have the following prerequisites in place:

Install Python:

Ensure your computer has a recent version of Python 3 installed (check the OpenAI library’s documentation for the minimum supported version). Visit the official Python website to download Python and follow the installation guidelines for your operating system.

Upgrade Pip:

Pip, a Python package manager, enables the installation and management of Python packages. Make sure you have a recent version of Pip installed. Recent Python installers already include Pip. If you are using an older version, you can upgrade Pip by running `python -m pip install --upgrade pip` in your terminal or command prompt.

Obtain an OpenAI API Key:

You’ll need an API key from OpenAI to access their GPT API. If you don’t already have one, sign up on the OpenAI website, then click the "Create new secret key" button to generate your API key.

Install essential libraries

To install the necessary libraries for training ChatGPT on custom data, including OpenAI, GPT Index (LlamaIndex), PyPDF2, and Gradio, you can use the following commands in your terminal or command prompt:

OpenAI Library:

This library provides access to OpenAI’s GPT API, allowing you to interact with the model, send prompts, and receive responses. You can install it using Pip: `pip install openai`

GPT Index (LlamaIndex):

GPT Index, also known as LlamaIndex, is a tool that allows for efficient indexing and searching through large datasets. It helps handle and analyze substantial amounts of text data during training. You can install it using Pip: `pip install llama-index`

PyPDF2:

PyPDF2 is a Python library designed for managing PDF files. If you need to process PDF documents as part of your custom data for training ChatGPT, you can install PyPDF2 using Pip: `pip install PyPDF2`

Gradio:

Gradio is a library that enables you to quickly create customizable UI components for machine learning models. You can use it to build interactive interfaces for ChatGPT in which you input text and receive responses. You can install it using Pip: `pip install gradio`

After installing these libraries, you can incorporate them into your Python code to train ChatGPT on your custom data. OpenAI provides documentation and examples for using their API. GPT Index, PyPDF2, and Gradio have their own documentation and resources for learning how to use them effectively in your projects.
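Before moving on, it can help to confirm that the libraries are actually importable. Here is a minimal sketch that uses only the standard library. The helper name `check_libraries` is hypothetical, and the import names listed are assumed to be the module names these packages expose.

```python
from importlib.util import find_spec

# Assumption: these are the import names of the packages discussed above.
REQUIRED_MODULES = ["openai", "llama_index", "PyPDF2", "gradio"]


def check_libraries(modules=REQUIRED_MODULES):
    """Return a dict mapping each module name to True if it can be imported."""
    return {name: find_spec(name) is not None for name in modules}


# Print a short status report for the current environment.
for name, available in check_libraries().items():
    print(f"{name}: {'installed' if available else 'missing'}")
```

Any module reported as missing can be installed with Pip before you continue.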

Prepare custom data

Begin by pinpointing valuable sources for data collection. These may include customer interactions, chat logs, support tickets, domain-specific documents, or blog posts. Your aim is to compile a wide range of conversational examples that encompass various topics, situations, and user intentions.

When collecting data, always adhere to ethical standards. Anonymize or remove any personally identifiable information (PII) to protect user privacy and comply with privacy regulations.
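As an illustration of the anonymization step, here is a minimal sketch that masks e-mail addresses and phone-like numbers with regular expressions. The patterns and the `redact_pii` helper are illustrative assumptions and deliberately simple. Real PII removal usually needs broader coverage (names, addresses, account numbers) and a manual review.

```python
import re

# Deliberately simple patterns; they will not catch every form of PII.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact_pii(text: str) -> str:
    """Replace e-mail addresses and phone-like numbers with placeholder tags."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


sample = "Contact Jane at jane.doe@example.com or +1 (555) 123-4567."
print(redact_pii(sample))
```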

To prepare and format custom data for training ChatGPT, you can follow these steps:

Create a directory:

Start by creating a directory on your computer to store your custom data files. This directory will contain all your text training data. You can name the directory something descriptive, like “custom_data”.

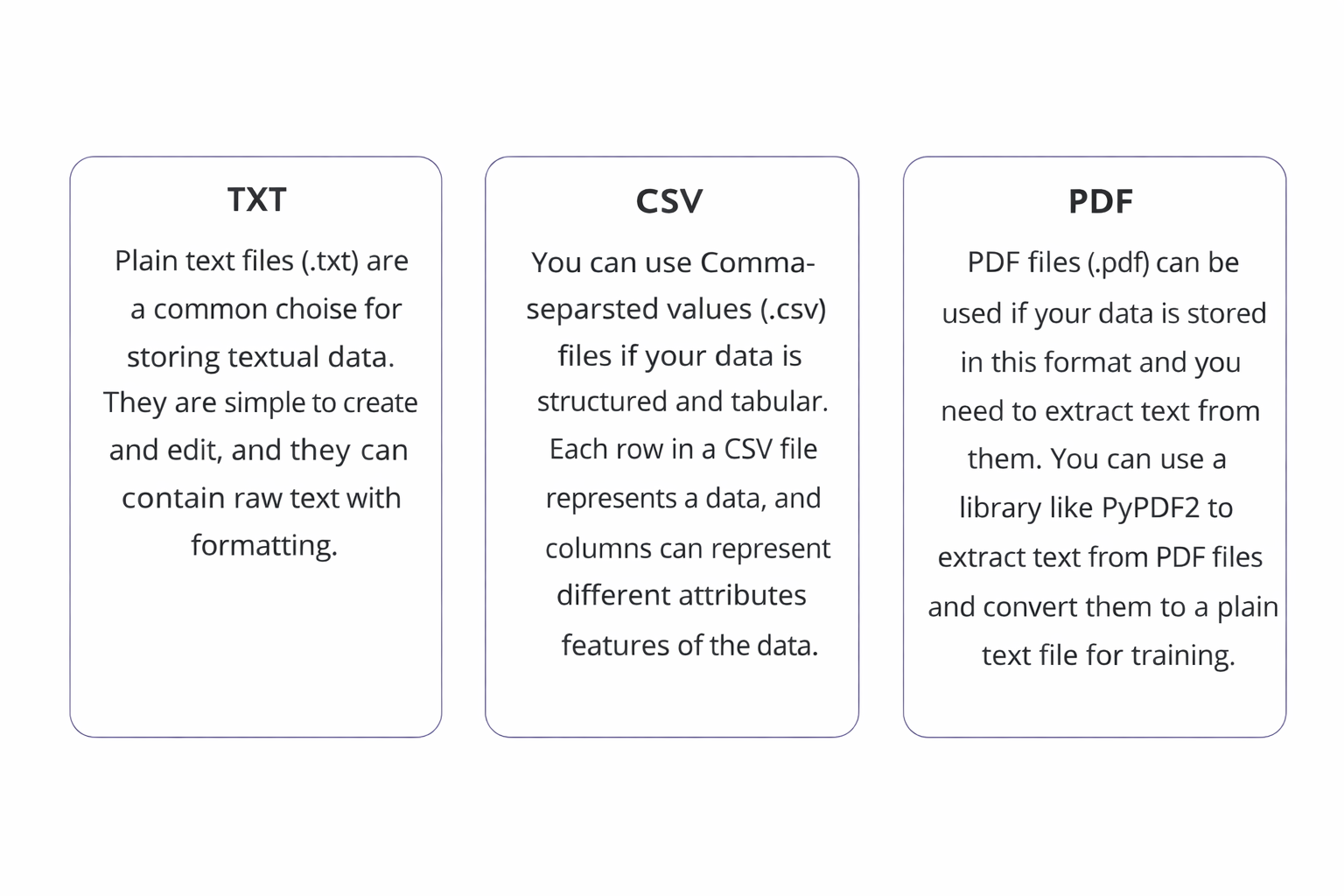

Choose the right file types:

The choice of file types depends on the nature of your data and your preferences. Plain text (.txt) files, CSV files, and PDF documents are the formats this guide works with.

Format your data:

Regardless of the file type, it’s important to format your data properly for training. Here are some key considerations:

- Clean the data: Ensure that your data is clean and free of irrelevant or noisy text. Remove leftover formatting, stray special characters, and unwanted symbols that might interfere with the training process.
- Organize the data: If your data is in CSV format, make sure it is well structured and has a clear header for each column. For text files, split your data into separate files if you want to train on different categories or topics.
- Preprocess the text: Depending on your specific needs, you may need to preprocess the text data. In classical NLP pipelines this can involve tokenization, lemmatization, stemming, and stop word removal. GPT-style models apply their own tokenizer to raw text, so light cleanup is often sufficient for them. The steps you choose will vary based on your data’s characteristics and the needs of your training process.
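The cleaning and organizing steps above can be sketched with the standard library alone. This is a minimal example and not a complete pipeline. The helper names `clean_text` and `load_corpus` are hypothetical, and the code assumes your data lives in UTF-8 `.txt` files inside the directory you created earlier.

```python
import re
from pathlib import Path


def clean_text(raw: str) -> str:
    """Strip HTML-like tags and control characters, then collapse whitespace."""
    text = re.sub(r"<[^>]+>", " ", raw)                        # drop HTML-like tags
    text = re.sub(r"[\x00-\x08\x0b\x0c\x0e-\x1f]", "", text)   # drop control chars
    return re.sub(r"\s+", " ", text).strip()                   # normalize whitespace


def load_corpus(directory: str = "custom_data") -> dict:
    """Read every .txt file in the directory and return {filename: cleaned text}."""
    corpus = {}
    for path in sorted(Path(directory).glob("*.txt")):
        corpus[path.name] = clean_text(path.read_text(encoding="utf-8"))
    return corpus
```

Calling `load_corpus("custom_data")` returns one cleaned string per file, which keeps categories separate if you organized your data into one file per topic.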

Scripting and training process

First, use a text editor or an integrated development environment (IDE) like PyCharm or VSCode to write a new Python script (e.g., train_chatgpt.py). In this script, you’ll create the code to train ChatGPT using your custom data. This is what your script may look like:
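Here is a minimal sketch of such a script, under stated assumptions. It uses the Chat Completions endpoint of the v1 `openai` Python client and supplies `custom_data` to the model as context with each request, which does not alter the model itself. The model name, the sample data, and the helper names `build_messages` and `ask` are illustrative.

```python
API_KEY = "YOUR_API_KEY_HERE"  # placeholder: substitute your own key
MODEL = "gpt-4o-mini"          # assumption: any chat model your account can use

# Your custom data as a single string (illustrative content).
custom_data = (
    "Our support desk is open Monday to Friday, 9:00 to 17:00.\n"
    "Refunds are processed within 5 business days."
)


def build_messages(custom_data: str, question: str) -> list:
    """Put the custom data in a system message so the model answers from it."""
    system_prompt = (
        "Answer using only the reference material below.\n\n"
        f"Reference material:\n{custom_data}"
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": question},
    ]


def ask(question: str) -> str:
    """Send the question plus custom data to the Chat Completions endpoint."""
    from openai import OpenAI  # imported here so build_messages works offline

    client = OpenAI(api_key=API_KEY)
    response = client.chat.completions.create(
        model=MODEL,
        messages=build_messages(custom_data, question),
    )
    return response.choices[0].message.content
```

Calling `print(ask("When is support available?"))` sends a single request and prints the model’s answer.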

Replace “YOUR_API_KEY_HERE” with an OpenAI API key that you generated. The “custom_data” variable should contain your custom data formatted as a string.

Then run your Python script in the terminal. To do this, use the cd command to go to the directory that contains your saved script, then run it with the Python command: `python train_chatgpt.py`

Running this script sends your custom data to OpenAI’s API. How the data is used depends on the approach. When it is supplied as context with each request, the model’s parameters stay unchanged and the model simply answers with your data in view. When it is uploaded as a training file for a fine-tuning job, OpenAI trains a new model variant whose parameters reflect the patterns in your data. A fine-tuning job may take some time depending on the size and complexity of your data. Once it is done, you can use the resulting model to generate responses to new input text.
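To update a model’s weights through the API, OpenAI provides fine-tuning endpoints that accept a JSONL file of example conversations. The sketch below assumes the v1 `openai` Python client. The file name, the system prompt, the base model name, and both helper names are illustrative assumptions, and the fine-tuning documentation specifies the minimum number of examples a job requires.

```python
import json


def write_training_file(pairs, path="training_data.jsonl",
                        system_prompt="You are a helpful support assistant."):
    """Write (question, answer) pairs as chat-format JSONL, one example per line."""
    with open(path, "w", encoding="utf-8") as f:
        for question, answer in pairs:
            example = {
                "messages": [
                    {"role": "system", "content": system_prompt},
                    {"role": "user", "content": question},
                    {"role": "assistant", "content": answer},
                ]
            }
            f.write(json.dumps(example, ensure_ascii=False) + "\n")
    return path


def start_fine_tuning(path, api_key="YOUR_API_KEY_HERE",
                      base_model="gpt-4o-mini-2024-07-18"):
    """Upload the training file and create a fine-tuning job; return the job ID."""
    from openai import OpenAI  # imported lazily; only needed for the API calls

    client = OpenAI(api_key=api_key)
    with open(path, "rb") as f:
        uploaded = client.files.create(file=f, purpose="fine-tune")
    job = client.fine_tuning.jobs.create(training_file=uploaded.id, model=base_model)
    return job.id
```

Once the job finishes, the resulting fine-tuned model ID can be used in place of the base model name in your requests.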

Embedding and querying data

To embed text and load the embeddings into a vector store so that ChatGPT can draw on your data, follow these steps.

Embedding text:

Text embedding is the process of converting text into numerical representations (vectors) that capture the semantic meaning of the text. You can use pre-trained models, such as OpenAI’s embedding models, to generate embeddings for your text data. Here’s an example of how a text can be embedded using OpenAI’s API:
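A minimal sketch of the embedding call, assuming the v1 `openai` Python client. The model name `text-embedding-3-small` and the helper names `embed_texts` and `make_client` are illustrative choices.

```python
API_KEY = "YOUR_API_KEY_HERE"               # placeholder: substitute your own key
EMBEDDING_MODEL = "text-embedding-3-small"  # assumption: an OpenAI embedding model


def embed_texts(texts, client, model=EMBEDDING_MODEL):
    """Return one embedding vector (a list of floats) per input string."""
    response = client.embeddings.create(model=model, input=texts)
    return [item.embedding for item in response.data]


def make_client(api_key=API_KEY):
    """Create an OpenAI client; imported lazily so embed_texts can be tested offline."""
    from openai import OpenAI
    return OpenAI(api_key=api_key)


text = "Refunds are processed within 5 business days."
# embeddings = embed_texts([text], make_client())  # uncomment to call the API
```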

Replace “YOUR_API_KEY_HERE” with your newly generated OpenAI API key. The text variable should contain the text you want to embed. The embeddings variable will contain the numerical representations of the text generated by the embedding model.

Loading embeddings to a vector store:

Once you have the embeddings for your text data, you can load them into a vector store. A vector store is a database that stores vector representations of data for efficient retrieval and querying. You can use libraries like faiss or annoy in Python to create and manage vector stores. Here’s an example of how to load embeddings into a vector store using faiss:
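A minimal sketch with faiss, assuming the `faiss-cpu` package and NumPy are installed. The helper name `build_and_save_index` is hypothetical, and a flat L2 index is just one simple choice among the index types faiss offers.

```python
def build_and_save_index(embeddings, path="vectorstore.index"):
    """Build a flat L2 index from equal-length vectors and save it to disk."""
    # Imported inside the function so the module loads even without faiss.
    import faiss
    import numpy as np

    vectors = np.asarray(embeddings, dtype="float32")  # faiss expects float32 rows
    index = faiss.IndexFlatL2(vectors.shape[1])        # exact, brute-force L2 search
    index.add(vectors)                                 # store every vector
    faiss.write_index(index, path)                     # persist for later queries
    return index
```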

In this example, “vectorstore.index” is the file where the vector store will be saved. You can load this file later to query the vector store.

Querying data

Once you have loaded the embeddings into a vector store, you can query the vector store to retrieve similar embeddings based on a given query. For instance, if you want to combine chat history with new questions to generate responses, you can embed the chat history and the new questions, and then query the vector store to find the most similar embeddings to the combined input. Here’s a simplified example:
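A minimal sketch of the query step, again assuming faiss and NumPy are installed and that an index was saved to “vectorstore.index”. The helper names `combine_input` and `find_similar` are hypothetical. The query embedding is expected to come from the same embedding model as the stored vectors.

```python
def combine_input(chat_history, question):
    """Join earlier turns and the new question into one string to embed."""
    return "\n".join(list(chat_history) + [question])


def find_similar(query_embedding, index_path="vectorstore.index", k=3):
    """Return (distances, indices) of the k stored vectors closest to the query."""
    # Imported inside the function so the module loads even without faiss.
    import faiss
    import numpy as np

    index = faiss.read_index(index_path)
    query = np.asarray([query_embedding], dtype="float32")  # shape (1, dim)
    distances, indices = index.search(query, k)
    return distances[0].tolist(), indices[0].tolist()
```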

In this example, “k” specifies the number of similar embeddings to retrieve. The distances variable contains the distances between the query embedding and the retrieved embeddings, while the indices variable contains the indices of the most similar embeddings in the vector store. You can use these indices to look up the matching entries in the embeddings list, and from there the original text they were generated from.

Testing and deployment

To test a trained ChatGPT model using a simple user interface, you can use the Gradio library, which allows you to create interactive interfaces for machine learning models. Here’s how you can do this:

- Write a Python script that creates a simple user interface for your ChatGPT model using Gradio.

Replace “YOUR_API_KEY_HERE” with your generated OpenAI API key. This script creates a simple text box where you can input text, and it will display the response generated by your trained ChatGPT model.

- Use your terminal or command prompt to launch the Python script. This will run the Gradio interface, enabling you to interact with your ChatGPT model.
- Enter text into the input box and press Enter. The ChatGPT model will generate a response based on the input text, which will be displayed in the output box.
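The interface described in the steps above can be sketched as follows, assuming the v1 `openai` client and the `gradio` package. The model name, the interface title, and the helper names `generate_reply` and `launch_interface` are illustrative. If you have a fine-tuned model, its ID would replace the model name.

```python
API_KEY = "YOUR_API_KEY_HERE"  # placeholder: substitute your own key
MODEL = "gpt-4o-mini"          # assumption: or the ID of your fine-tuned model


def generate_reply(prompt, client, model=MODEL):
    """Send one user message to the chat endpoint and return the reply text."""
    response = client.chat.completions.create(
        model=model,
        messages=[{"role": "user", "content": prompt}],
    )
    return response.choices[0].message.content


def launch_interface():
    """Start a local Gradio app with one text input and one text output."""
    import gradio as gr        # imported lazily; only needed to serve the UI
    from openai import OpenAI

    client = OpenAI(api_key=API_KEY)
    demo = gr.Interface(
        fn=lambda prompt: generate_reply(prompt, client),
        inputs="text",
        outputs="text",
        title="Custom ChatGPT test",
    )
    demo.launch()
```

Calling `launch_interface()` at the end of the script starts a local web server, and Gradio prints the address to open in your browser.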

Deployment considerations and best practices

- Scalability: Consider the scalability of your deployment. If you expect a large number of users, you may need to use cloud services or distributed systems to handle the load.
- Security: Ensure that your deployment is secure, especially when dealing with sensitive data. Use HTTPS, authentication mechanisms, and other security best practices.
- Monitoring and logging: Use monitoring and logging to track the performance of your ChatGPT model in production, so that issues can be identified and fixed quickly.
- Model updates: Plan for regular updates to your ChatGPT model to keep it up to date with new data and improvements. This may require retraining the model from time to time.
- User experience: Focus on providing a smooth and intuitive user experience in your interface. Consider user feedback and refine your design to improve usability.
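As one way to approach the monitoring and logging point above, here is a minimal sketch that records the latency and outcome of every model call using only the standard library. The decorator name `log_calls` and the stand-in `answer` function are hypothetical. In production you would wrap your real model call and send the logs to your monitoring system.

```python
import logging
import time
from functools import wraps

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("chatbot")


def log_calls(func):
    """Log the latency and outcome of each call to the wrapped function."""
    @wraps(func)
    def wrapper(*args, **kwargs):
        start = time.perf_counter()
        try:
            result = func(*args, **kwargs)
        except Exception:
            # Record the failure with a traceback, then let the error propagate.
            logger.exception("%s failed after %.3fs",
                             func.__name__, time.perf_counter() - start)
            raise
        logger.info("%s succeeded in %.3fs",
                    func.__name__, time.perf_counter() - start)
        return result
    return wrapper


@log_calls
def answer(prompt: str) -> str:
    # Stand-in for the real model call.
    return f"(reply to: {prompt})"
```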

Consider Turning to No-Code Tools

No-code solutions like Botsonic provide a user-friendly interface for creating, training, and deploying chatbots with ChatGPT. Their main advantage is that no coding is required.

Botsonic provides an intuitive interface for designing conversational flows, training AI models, and integrating chatbots with various messaging channels and websites. The tool makes the whole process of building chatbots simpler by abstracting away the technical complexities. While the tool does all the heavy lifting, you are free to focus on crafting engaging conversational interactions for your users.

With Botsonic, you can create AI-powered chatbots using technologies like OpenAI’s GPT-3 without writing a single line of code. This makes it accessible to a wide range of users, including those without programming backgrounds.

Here’s how these tools simplify the process:

- Conversation design: You can visually design conversational flows by arranging components like messages, buttons, and user inputs on a canvas. This makes it easier to create complex chatbot interactions.
- Natural Language Understanding (NLU): No-code platforms like Botsonic offer built-in NLU capabilities to understand user inputs and extract intents and entities. You can define custom intents and train the NLU model without coding expertise.
- Customization: You can customize chatbot responses by adding dynamic content, such as user inputs or data from external sources. This allows for personalized responses tailored to your unique context.
- Data import: You can upload your own datasets or documents directly to the platform. The platform then processes the data, extracts relevant information, and uses it to train your chatbot’s AI model.
- Training and deployment: No-code platforms, such as Botsonic, handle the training of the AI model behind the scenes. You can initiate the training process with just a few clicks, and the platform optimizes the model based on the provided data.
- Integration: These platforms provide integrations with different messaging services, including Facebook Messenger and Slack, as well as website plugins. This allows you to deploy your chatbot across multiple platforms without resorting to coding.
- Analytics and monitoring: You can track your chatbot’s performance through built-in analytics tools. These tools monitor user interactions and track key metrics, helping you make data-driven decisions to improve your chatbot.

Challenges and Best Practices

Training ChatGPT comes with some tricky parts. Here are some common challenges and best practices to overcome them:

Data quality

- Challenge: Low-quality or biased data can lead to inaccurate or inappropriate responses.
- Way to overcome: Use accurate, representative data that covers the full range of topics you care about. Keep your data clean by removing errors, inconsistencies, and biased examples.

Data quantity

- Challenge: Insufficient data can lead to overfitting and poor generalization.
- Way to overcome: Make sure you have plenty of data to train on. If you don’t have enough, consider obtaining additional data from various sources. You can also augment your dataset by paraphrasing or recombining existing content.

Finding the right settings

- Challenge: Choosing the right parameters, such as learning rate, model architecture, and batch size, is vital for effective training.
- Way to overcome: Experiment with different settings, such as how fast the model learns (learning rate) and how many examples it processes at once (batch size). Try different combinations and keep the one that works best for your case.
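The experimentation described above can be organized as a simple grid of candidate settings. This is a minimal sketch. The key names `n_epochs`, `batch_size`, and `learning_rate_multiplier` are assumed to mirror the options exposed by OpenAI’s fine-tuning API, the candidate values are arbitrary placeholders, and the helper name `hyperparameter_grid` is hypothetical.

```python
from itertools import product


def hyperparameter_grid(epochs=(2, 3), batch_sizes=(4, 8), lr_multipliers=(0.5, 1.0)):
    """Enumerate every combination of the candidate settings as a list of dicts."""
    return [
        {"n_epochs": e, "batch_size": b, "learning_rate_multiplier": lr}
        for e, b, lr in product(epochs, batch_sizes, lr_multipliers)
    ]


# Each configuration would be used for one training run and then evaluated.
for config in hyperparameter_grid():
    print(config)
```

Because every run costs time and money, it is usually sensible to start with a small grid and widen it only around the settings that perform best.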

Computing power

- Challenge: Training large-scale language models such as ChatGPT requires substantial computational resources, including powerful GPUs or TPUs and ample memory.
- Way to overcome: If you don’t have a powerful computer, you can use cloud services. Fine-tuning through a hosted API also moves the computation to the provider’s infrastructure. If you run training yourself, you can optimize your pipeline with techniques like distributed training or model parallelism.

Fine-tuning

- Challenge: Fine-tuning ChatGPT on custom data requires careful planning and execution.
- Way to overcome: Define clear goals for your fine-tuning task and choose suitable evaluation metrics to measure performance. Employ techniques such as early stopping and learning rate scheduling to avoid overfitting and achieve convergence.
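Evaluation metrics and early stopping both depend on examples the model has not been trained on. Here is a minimal sketch of a reproducible train/validation split using the standard library. The helper name `train_validation_split`, the 20% validation share, and the seed are illustrative assumptions.

```python
import random


def train_validation_split(examples, validation_fraction=0.2, seed=42):
    """Shuffle examples reproducibly and split them into (train, validation) lists."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)  # fixed seed gives the same split each run
    n_validation = max(1, int(len(shuffled) * validation_fraction))
    return shuffled[n_validation:], shuffled[:n_validation]
```

The validation list is kept out of training and used only to measure performance, for example to decide when further training stops helping.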

Ethical considerations

- Challenge: Training AI models like ChatGPT comes with ethical responsibilities, including mitigating biases, ensuring privacy and security, and promoting responsible use.
- Way to overcome: Use fairness-aware training techniques to reduce biases in your model. Apply anonymization and encryption to protect data privacy and security. Educate users about the limitations and ethical considerations of AI-generated content.

Why Consider Hiring AI Developers

Hiring AI developers to help you train ChatGPT on your own data can be a smart move in certain situations. Here’s when it makes sense and why:

- Complex projects: Some projects require advanced AI expertise or involve complex tasks like natural language processing (NLP) or large-scale data processing. Hiring expert AI developers helps ensure that your project is handled with the necessary expertise and attention to detail.
- Custom solutions: If you need a highly custom solution, AI developers can work with you to understand your requirements and develop one that meets your goals.
- Technical challenges: Training AI models like ChatGPT can pose technical challenges, especially when handling extensive datasets or complex algorithms. AI specialists have the skills and experience to navigate these challenges effectively.
- Efficiency and quality: Hiring AI developers can help achieve faster and higher-quality results. They are well-versed in best practices and can optimize the training process to ensure the best performance.
- Long-term support: AI developers can provide ongoing support and maintenance for your AI chatbot, ensuring that it continues to perform well and adapts to changing requirements over time.

Summing Up

Training ChatGPT on your own data is like crafting a custom-made tool. Start by gathering a mix of data that covers all the topics you want your chatbot to understand. Clean and organize your data to make sure it’s in top shape. Experiment with various services and settings to discover the most effective method for training your chatbot. Fine-tune your model by adjusting it until it works just right. And always keep ethical guidelines in mind.

In case you need extra power, consider reaching out to professional AI developers. The Requestum specialists are armed with real-life experience in a wide range of AI technologies. With our expertise, we can help you train ChatGPT on your data effectively and efficiently, delivering personalized solutions that meet your company’s needs.

Frequently Asked Questions

Can I train ChatGPT on my own data without technical expertise?

Yes. Even without technical expertise, it’s possible to do so with the help of straightforward tools and clear setup guides. The process of training ChatGPT has become much more accessible, allowing teams to adapt the model to their workflows and communication style.

What kind of data can be used for custom GPT training?

A variety of text-based materials can be used for custom GPT training. The list includes FAQs, knowledge base articles, support messages, internal documentation, and chat logs. Using content that reflects your company’s expertise helps the model generate more accurate and relevant responses.

What data should I start with?

Start with information that represents how your business interacts with clients or handles common inquiries. For example, previous customer conversations, helpful responses, and team documentation can all strengthen training ChatGPT for specific use cases. The better the data quality, the more consistent the results.

How long does custom GPT training take?

The duration depends on the amount and structure of your dataset. In general, custom GPT training may take anywhere from a few hours to a couple of days. When you decide to train your own model with larger or more complex data, expect the process to take slightly longer.

How should I prepare my data before training?

Before you begin training ChatGPT, review your dataset carefully. Removing duplicate, outdated, or irrelevant information helps the system learn more effectively. Clean, well-prepared data ensures that your custom GPT training produces reliable, accurate, and context-aware responses.
